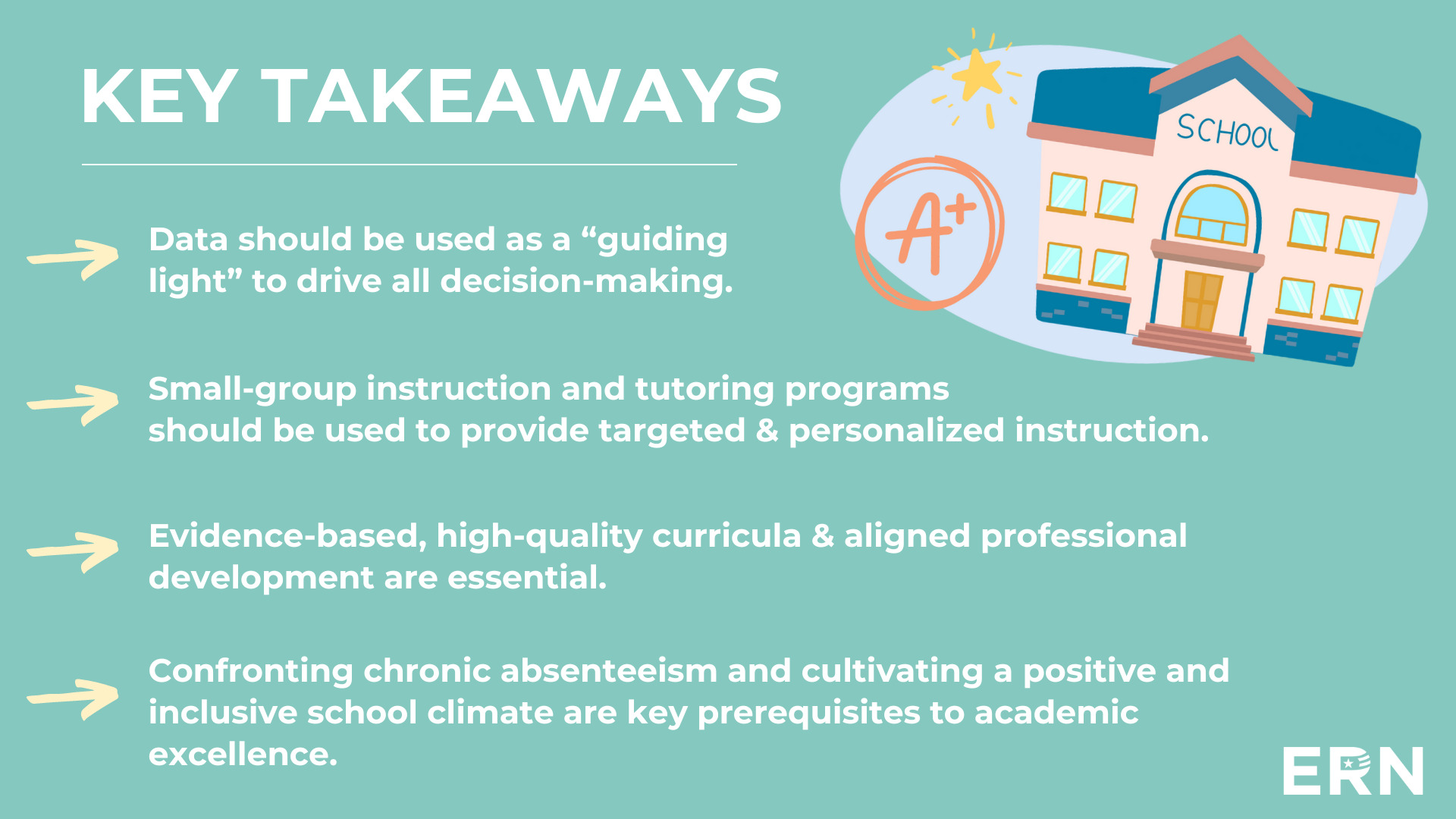

Spotlight Schools: High-Poverty Schools That Are Raising The Bar

Discover what works! Dive into the first brief of our spotlight…

Get the latest news from ERN sent directly to your inbox.

Support ERN by donating to Education Reform Now.

Learn how we work to support students.

ERN is a network of supporters across the advocacy and policymaking arenas working to expand what works and change what is broken in the public education system.